Exploring the Power of Immersion Cooling in High-Performance Computing

In the rapidly evolving digital age, High-Performance Computing (HPC) has emerged as a cornerstone of technological advancement, powering complex computations


In recent years, the tech industry has been grappling with a significant GPU shortage. This scarcity has been widely attributed to a surge in demand for high-performance computing, driven by sectors such as gaming, cryptocurrency mining, and artificial intelligence. However, the issue is not as straightforward as it seems. While demand has indeed skyrocketed, the shortage is not merely a matter of insufficient supply. Instead, it’s a symptom of a deeper, more complex problem.

The Real Issue: Choosing the Right GPU for Your Needs

The crux of the issue is not the number of GPUs available but the choice of the right GPU for a given task. The market offers a wide variety of GPUs, each designed with different capabilities and for different purposes. The challenge is working out which one is the most efficient and cost-effective for your specific needs.

In the realm of artificial intelligence (AI), this challenge becomes even more pronounced. AI applications, particularly those involving machine learning and deep learning, require substantial computational power. However, not all GPUs are created equal when it comes to these tasks. The key to navigating the GPU shortage and achieving optimal performance in AI applications is therefore not simply to find any available GPU, but to find the “right-sized” GPU.

In the following sections, we will delve deeper into the concept of a “right-sized” GPU, explore the inefficiencies of current GPU usage in AI, and provide guidance on how to select the most suitable GPU for your AI applications. Whether you’re a seasoned AI developer or a newcomer to the field, this guide will equip you with the knowledge you need to make informed decisions in the face of the ongoing GPU shortage.

When it comes to AI, particularly in the realm of machine learning and deep learning, GPUs are often the go-to choice for their parallel processing capabilities. However, there are hidden inefficiencies in the way GPUs are currently used in AI. One of the most significant inefficiencies lies in the mismatch between the GPU’s capabilities and the requirements of the AI application. Often, high-end GPUs are used for tasks that could be efficiently handled by less powerful, and consequently less expensive, GPUs. This mismatch not only leads to unnecessary expenditure but also exacerbates the GPU shortage by increasing demand for high-end GPUs.

The selection of GPUs is often influenced by marketing narratives that promote the most powerful and expensive GPUs as the best choice for all AI tasks. This narrative, while beneficial for GPU manufacturers, can mislead consumers into believing that they need the highest-end GPUs for their AI applications. In reality, the “right-sized” GPU depends on the specific requirements of the AI task at hand.

High-end GPUs such as NVIDIA’s A100 and H100 are often touted as the gold standard for AI applications. However, these GPUs are scarce and expensive, making them inaccessible for many developers and small businesses. This scarcity is not just a result of high demand, but also of production constraints and market dynamics.

Despite the marketing narratives and the scarcity of high-end GPUs, there is a vast untapped potential in consumer-grade GPUs. These GPUs, which are more readily available and affordable, can handle a wide range of AI tasks efficiently. By understanding the specific requirements of their AI applications and choosing the “right-sized” GPU, developers can optimize their resources, reduce costs, and mitigate the impact of the GPU shortage. In the following sections, we will delve deeper into how to select the “right-sized” GPU and explore real-world examples of efficient GPU usage in AI.

Navigating the GPU landscape can be a daunting task, especially given the variety of options available and the technical jargon associated with them. However, by understanding your application’s needs and the GPU requirements for different stages of an AI model’s lifecycle, you can make an informed decision. Here’s a step-by-step guide to help you in this process.

AI models typically go through two main stages: training and inference. Each stage has different GPU requirements.

By understanding these stages and their requirements, you can choose the right GPU for each stage, optimizing your resources and potentially saving costs. Remember, the “right-sized” GPU is not always the most powerful or expensive one, but the one that best meets the needs of your application.
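One way to make this concrete is to treat right-sizing as a filter-then-minimize problem: discard the GPUs that cannot meet the workload's requirements, then take the cheapest of what remains. The sketch below assumes memory is the only hard requirement. The catalog names, memory sizes, and hourly prices are made up for illustration and do not describe real products.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Gpu:
    name: str              # hypothetical label, not a real product
    memory_gb: float       # on-board memory in GB
    price_per_hour: float  # hypothetical rental price in dollars


def right_sized(catalog: list[Gpu], required_memory_gb: float) -> Optional[Gpu]:
    """Return the cheapest GPU with enough memory, or None if none qualifies."""
    candidates = [g for g in catalog if g.memory_gb >= required_memory_gb]
    return min(candidates, key=lambda g: g.price_per_hour, default=None)


# Illustrative catalog; every figure here is an assumption.
CATALOG = [
    Gpu("small", 12, 0.30),
    Gpu("medium", 24, 0.60),
    Gpu("large", 80, 3.50),
]

choice = right_sized(CATALOG, required_memory_gb=16)
print(choice.name if choice else "no GPU fits")
```

A real selection would likely add further constraints, such as minimum throughput or interconnect speed, as extra filters before the price comparison.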

Training AI models is a complex process that involves feeding data through algorithms to create a model that can make accurate predictions. This process is computationally intensive and requires significant resources. Let’s take a closer look at the demands of this stage and the role of high-powered GPUs and network connections.

The training stage of AI models involves processing large amounts of data, running complex algorithms, and continuously adjusting the model based on the output. This process requires a high level of computational power.

For instance, training a deep learning model can involve billions of calculations. These calculations are often matrix multiplications, a type of operation that GPUs excel at due to their parallel processing capabilities.
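To give a sense of scale, the sketch below counts the floating-point operations in a single matrix multiplication using the usual convention of two operations (one multiply, one add) per inner-product term. The layer dimensions are hypothetical and chosen only for illustration.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """Approximate floating-point operations for an (m x k) @ (k x n) product.

    Each of the m * n output entries is a dot product of length k, counted
    here as 2 * k operations (k multiplications and roughly k additions).
    """
    return 2 * m * k * n


# Hypothetical layer: a batch of 512 inputs through a 4096 x 4096 weight matrix.
flops = matmul_flops(512, 4096, 4096)
print(f"{flops / 1e9:.1f} GFLOPs for one forward pass of this layer")
```

A single layer at this size already needs about 17 billion operations per batch, and training repeats this across many layers and many batches.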

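Moreover, the datasets used for training can be enormous. For example, ImageNet, a common dataset for image recognition tasks, contains over 14 million images. The dataset itself is streamed to the GPU in batches, but the model’s weights, gradients, and optimizer state, together with the activations for each batch, must fit in GPU memory, so larger models and batch sizes call for GPUs with more memory.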
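As a rough illustration of how training memory scales with model size, the sketch below estimates the memory taken by weights, gradients, and optimizer state. It assumes plain 32-bit training with the Adam optimizer, which works out to about 16 bytes per parameter; mixed-precision setups and other optimizers will differ, and activations come on top. The function name and the 1-billion-parameter example are hypothetical.

```python
def training_state_gb(num_params: int, bytes_per_param: int = 16) -> float:
    """Rough memory for weights, gradients, and optimizer state, in GB (1e9 bytes).

    The default of 16 bytes per parameter assumes fp32 weights (4 bytes),
    fp32 gradients (4 bytes), and two fp32 Adam moment estimates (8 bytes).
    Activations and framework overhead are not included.
    """
    return num_params * bytes_per_param / 1e9


# Hypothetical model with 1 billion parameters.
print(f"{training_state_gb(1_000_000_000):.1f} GB before activations")
```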

High-powered GPUs play a critical role in the training stage due to their ability to perform many calculations simultaneously. This parallel processing capability makes them significantly faster than CPUs for training AI models.

GPUs with a large number of cores and high memory bandwidth can process more data at once, reducing the time it takes to train a model. For example, the NVIDIA A100, a popular choice for AI training, has 6,912 CUDA cores and a memory bandwidth of roughly 1.6 TB/s in its 40 GB version.
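Memory bandwidth sets a floor on how quickly a GPU can even touch its data. The sketch below computes that lower bound for reading a model's weights once, using the 1.6 TB/s figure quoted above. The model size (1 billion parameters stored in 16-bit precision) is a hypothetical example, and real workloads will be slower than this idealized bound.

```python
def min_read_time_ms(num_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound, in milliseconds, on reading num_bytes once from GPU memory."""
    return num_bytes / bandwidth_bytes_per_s * 1000.0


weights_bytes = 1_000_000_000 * 2  # hypothetical: 1B parameters at 2 bytes each
bandwidth = 1.6e12                 # 1.6 TB/s, the A100 figure quoted in the text

print(f"{min_read_time_ms(weights_bytes, bandwidth):.2f} ms per full pass over the weights")
```

Halving the bandwidth doubles this floor, which is why bandwidth matters alongside core count.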

In addition to powerful GPUs, high-speed network connections are also crucial during the training stage. They allow for faster data transfer, which is particularly important when training models on distributed systems where data needs to be shared between multiple GPUs.

In conclusion, the training stage of AI models is a resource-intensive process that requires powerful GPUs and high-speed network connections. However, it’s important to remember that not all training tasks require the most powerful GPUs. Understanding the specific requirements of your training task can help you select the “right-sized” GPU and optimize your resources.

Once an AI model has been trained, the next step is to deploy it to make predictions on new data. This process, known as inference, has different requirements than the training stage. Let’s explore the importance of scalability and throughput in AI model serving, the difference in GPU requirements between training and serving, and the inefficiency of using the same GPUs for both tasks.

In the context of AI model serving, scalability refers to the ability of the system to handle increasing amounts of work by adding resources. As the demand for AI predictions increases, the system should be able to scale to meet this demand.
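Scaling by adding resources can be sketched as a simple capacity calculation: divide the target request rate by what one GPU replica can sustain, leaving some headroom for traffic spikes. The function name, the 80% headroom default, and the example rates below are all assumptions for illustration.

```python
import math


def replicas_needed(target_rps: float, per_replica_rps: float, headroom: float = 0.8) -> int:
    """Number of GPU replicas needed to serve target_rps requests per second.

    headroom is the fraction of each replica's measured capacity that we plan
    to use (0.8 means each replica is loaded to at most 80%).
    """
    if per_replica_rps <= 0 or not 0 < headroom <= 1:
        raise ValueError("per_replica_rps must be positive and headroom in (0, 1]")
    return max(1, math.ceil(target_rps / (per_replica_rps * headroom)))


# Hypothetical numbers: 950 requests/s target, 120 requests/s per replica.
print(replicas_needed(950, 120))
```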

Throughput, on the other hand, refers to the number of predictions that the system can make per unit of time. High throughput is crucial for applications that need to make a large number of predictions quickly, such as real-time recommendation systems or autonomous vehicles.
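Throughput is straightforward to measure: count predictions and divide by elapsed wall-clock time. The sketch below does this for any callable that processes a batch. Here `fake_predict` is a stand-in for a real model, and the batch sizes are arbitrary.

```python
import time
from typing import Callable, Iterable, Sequence


def measure_throughput(predict: Callable[[Sequence], object],
                       batches: Iterable[Sequence]) -> float:
    """Return predictions per second for predict() over the given batches."""
    start = time.perf_counter()
    total = 0
    for batch in batches:
        predict(batch)
        total += len(batch)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very coarse timers.
    return total / elapsed if elapsed > 0 else float("inf")


def fake_predict(batch: Sequence) -> list:
    """Placeholder for a real model call; doubles each input."""
    return [x * 2 for x in batch]


batches = [list(range(32)) for _ in range(100)]
print(f"{measure_throughput(fake_predict, batches):,.0f} predictions/s")
```

On a real GPU the model call is often asynchronous, so an accurate measurement would also need to wait for the device to finish before stopping the timer.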

While training AI models is computationally intensive and often requires high-end GPUs, serving them is typically less demanding. Inference runs only the forward pass of the trained model on new data; it does not compute gradients or update weights, so each prediction costs far less compute and memory than a training step.

As a result, the GPU requirements for serving models are often lower than for training. Consumer-grade GPUs, which are more affordable and readily available, can often handle the demands of serving models efficiently.

Given the difference in GPU requirements between training and serving, using the same high-end GPUs for both tasks can lead to inefficiencies. High-end GPUs may be underutilized during the serving stage, leading to unnecessary expenditure and resource waste.
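The cost of underutilization can be made explicit by dividing a GPU's hourly price by the fraction of time it does useful work. The prices and utilization figures in this sketch are hypothetical and only illustrate the arithmetic.

```python
def cost_per_utilized_hour(price_per_hour: float, utilization: float) -> float:
    """Effective price of one hour of useful work at the given utilization (0-1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return price_per_hour / utilization


# Hypothetical: a $4.00/h high-end GPU busy 25% of the time,
# versus a $0.50/h consumer-grade GPU busy 80% of the time.
print(f"high-end: ${cost_per_utilized_hour(4.00, 0.25):.2f} per useful hour")
print(f"consumer: ${cost_per_utilized_hour(0.50, 0.80):.3f} per useful hour")
```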

Moreover, using the same GPUs for both tasks can exacerbate the GPU shortage by increasing the demand for high-end GPUs. By understanding the different GPU requirements for training and serving, organizations can optimize their GPU usage, save costs, and contribute to alleviating the GPU shortage.

In conclusion, serving AI models efficiently requires an understanding of the specific demands of the inference stage and the ability to select the “right-sized” GPU for the task. By doing so, organizations can ensure high scalability and throughput while optimizing their resources.

To illustrate the concepts discussed so far, let’s look at two real-world examples that demonstrate the efficiency of using the “right-sized” GPU for AI tasks.

In a recent project, a team of AI researchers aimed to generate a large number of images using a deep learning model. Initially, they used high-end GPUs for the task, following the common belief that more powerful GPUs would deliver better performance.

However, they soon realized that the high-end GPUs were not fully utilized during the image generation process. They decided to switch to consumer-grade GPUs, which were more affordable and readily available.

The result was astounding. The team was able to generate more images per dollar spent on consumer-grade GPUs compared to high-end GPUs. This case study demonstrates the potential of consumer-grade GPUs for AI tasks and the importance of choosing the “right-sized” GPU based on the specific requirements of the task.
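The metric behind this comparison, images per dollar, is simply generation rate divided by hourly price. The sketch below shows the calculation with hypothetical figures; they are not the numbers from the project described above, which the text does not report.

```python
def images_per_dollar(images_per_hour: float, price_per_hour: float) -> float:
    """Images generated for each dollar of GPU rental."""
    if price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    return images_per_hour / price_per_hour


# Hypothetical figures for illustration only.
high_end = images_per_dollar(images_per_hour=3000, price_per_hour=3.00)
consumer = images_per_dollar(images_per_hour=1200, price_per_hour=0.50)

print(f"high-end: {high_end:.0f} images per dollar")
print(f"consumer: {consumer:.0f} images per dollar")
```

With these assumed inputs the slower GPU still wins on cost efficiency, because its price falls faster than its generation rate.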

Stable Diffusion XL (SDXL) is a state-of-the-art AI model for generating high-quality images. Serving this model requires a balance between computational power and cost efficiency.

A team of engineers decided to use a mix of high-end and consumer-grade GPUs to serve the model. They used high-end GPUs for the most computationally intensive parts of the model and consumer-grade GPUs for the less demanding parts.

This approach allowed them to serve the model efficiently while keeping costs under control. The success of this project underscores the importance of understanding the GPU requirements of different parts of an AI model and selecting the “right-sized” GPU for each part.

These examples highlight the potential of using the “right-sized” GPU for AI tasks. By understanding the specific GPU requirements of your AI tasks, you can optimize your resources, reduce costs, and achieve better results.

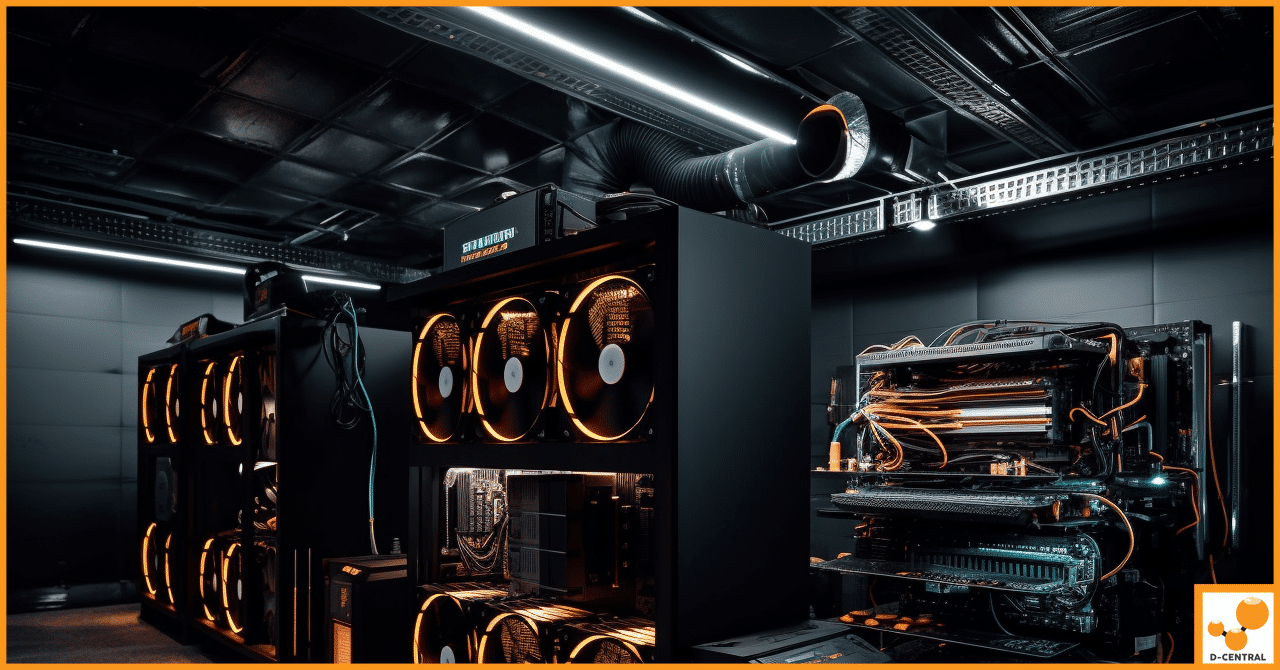

While GPU mining and AI model serving may seem like disparate fields, they share several similarities. Both require a deep understanding of GPU capabilities, efficient resource management, and the ability to navigate the complex GPU landscape. D-Central, with its extensive experience in GPU mining, is uniquely positioned to help clients leverage these parallels for their AI needs.

GPU mining, like AI model serving, requires the efficient use of GPU resources. Both fields involve running complex computations on GPUs and optimizing performance based on the specific requirements of the task. Moreover, both fields require an understanding of the GPU market, including the availability and pricing of different GPUs.

D-Central’s extensive experience in GPU mining has given us a deep understanding of the GPU landscape. We know the capabilities of different GPUs, how to optimize their performance, and where to find the best deals. Moreover, our wide network includes connections with GPU farms that are repurposing their computing power for AI tasks.

Whether you’re looking for the right GPU for your AI application, need help optimizing your GPU usage, or are considering repurposing GPU farms for AI, D-Central can provide the guidance and support you need.

Throughout this article, we’ve explored the complexities of the GPU landscape and the importance of selecting the “right-sized” GPU for your AI tasks. Whether it’s training an AI model or serving it, each task has unique requirements that need to be met for optimal performance and cost-efficiency.

We’ve also highlighted the hidden inefficiencies in GPU usage in AI and how marketing narratives can influence GPU selection. Moreover, we’ve delved into real-world examples that demonstrate the potential of using consumer-grade GPUs for AI tasks and the success of repurposing GPU farms for AI.

Navigating the GPU landscape can be a daunting task, but you don’t have to do it alone. At D-Central, we leverage our extensive experience in GPU mining and our wide network to provide expert guidance and support in AI model serving.

Whether you’re looking to select the right GPU for your AI application, optimize your GPU usage, or repurpose GPU farms for AI, we can provide the support you need. Contact us today to learn more about how we can help you navigate the GPU landscape and make the most of your AI initiatives. Remember, in the world of AI, the “right-sized” GPU can make all the difference.

What is the current GPU shortage in the tech industry?

The current GPU (Graphics Processing Unit) shortage in the tech industry is due to a surge in demand driven by sectors like gaming, cryptocurrency mining, and AI. However, it’s more complex than just a matter of supply, as it’s also an issue of selecting the right GPU for specific tasks.

What does it mean to find the “right-sized” GPU?

Finding the “right-sized” GPU means choosing the GPU that is most efficient and cost-effective for your specific needs. Not all tasks require high-end GPUs, and using one for work that a less powerful, cheaper GPU could handle leads to unnecessary expenditure and exacerbates the GPU shortage.

How do consumer-grade GPUs compare to high-end GPUs like the A100s and H100s in AI applications?

High-end GPUs like A100s and H100s are often promoted as the best for AI applications but they are expensive and scarce. Consumer-grade GPUs, though more affordable and readily available, can handle a wide range of AI tasks efficiently if the specific requirements of the AI applications are understood.

How do the requirements for GPUs differ between the training and inference stages of AI models?

Training AI models is a computationally intensive process that often requires high-end GPUs with a large number of cores and high memory bandwidth. The inference stage, where the trained model makes predictions on new data, is less computationally intensive and can often be performed effectively on consumer-grade GPUs.

How can D-Central aid in navigating the GPU landscape for AI model serving?

With their extensive experience in GPU mining, D-Central provides a deep understanding of the GPU landscape. They can offer guidance on selecting the right GPU for specific AI applications, optimizing GPU usage, or even repurposing GPU farms for AI.

